Making Natural Language Processing Work with Little Training Data

The Data Scarcity Problem

In recent years, deep learning has advanced steadily and achieved strong performance on many machine learning tasks. In the ROXANNE project, deep learning is applied to a variety of tasks.

One of the key ingredients that makes deep learning so powerful is access to large amounts of training data. For example, many state-of-the-art image classifiers are trained on ImageNet [1], which contains 1.4 million images. If only a small amount of training data is available, deep learning models often struggle to extract useful knowledge from it, which can result in poor performance.

Depending on the task, collecting a large training dataset may require considerable human effort. Figure 1 shows a single training sample for the Named Entity Recognition (NER) task. To build such an NER dataset, annotators have to go through every document word by word and assign a label to each word. This annotation process is tedious, costly and time-consuming, so gathering a dataset for every task is impractical and often infeasible. This is known as the data scarcity problem. Recently, much effort has been dedicated to making deep learning work with little training data. This is also our focus in ROXANNE, because many of the real-world tasks we tackle do not come with a large training set.

Figure 1 A training sample (sentence + corresponding labels) for the NER task. The ORG label stands for organization (Arsenal is an organization). The PER label stands for person. The O label stands for "not an entity".
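To make the format of such a training sample concrete, here is a minimal Python sketch. The sentence and the helper names (`make_sample`, `extract_entities`) are hypothetical and chosen for illustration only; the label set (ORG, PER, O) and the Arsenal/ORG pairing follow Figure 1.

```python
from typing import List, Tuple

Sample = List[Tuple[str, str]]


def make_sample(tokens: List[str], labels: List[str]) -> Sample:
    """Pair every token with its label; NER needs exactly one label per token."""
    if len(tokens) != len(labels):
        raise ValueError("each token needs exactly one label")
    return list(zip(tokens, labels))


def extract_entities(sample: Sample) -> Sample:
    """Keep only the tokens that are part of an entity (label other than 'O')."""
    return [(token, label) for token, label in sample if label != "O"]


# Hypothetical sentence, not the one shown in Figure 1.
tokens = ["Smith", "joined", "Arsenal", "yesterday", "."]
labels = ["PER", "O", "ORG", "O", "O"]

sample = make_sample(tokens, labels)
entities = extract_entities(sample)
print(entities)
```

Even this five-token sentence needs five labelling decisions, which is why annotating whole document collections by hand becomes expensive.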

We found that combining distant supervision with noise-robust Deep Neural Networks (DNNs) is an effective way to overcome the data scarcity problem. It works well on several natural language processing tasks while requiring little human effort in data collection. The following sections introduce this approach.

Distant Supervision

Distant supervision provides a quick and cheap way to obtain a large training set automatically. Take the NER task as an example. In NER, one can define heuristics or mapping rules that assign labels to text. For example, Adelani et al. [2] developed simple rules to recognize entities in text written in Yorùbá (see Figure 2). With such heuristics and rules, one can collect a labelled NER dataset without manual annotation.
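As a rough illustration of rule-based labelling, the sketch below tags tokens by looking them up in small name lists. The lists, the example sentence and the function name `distant_labels` are hypothetical; they are not the rules developed by Adelani et al. [2], only one simple kind of heuristic.

```python
from typing import Dict, List, Set

# Hypothetical name lists (gazetteers); a real system would use much larger ones.
GAZETTEERS: Dict[str, Set[str]] = {
    "PER": {"smith", "jordan"},
    "ORG": {"arsenal"},
}


def distant_labels(tokens: List[str]) -> List[str]:
    """Assign the first entity type whose name list contains the token, else 'O'."""
    labels = []
    for token in tokens:
        label = "O"
        for entity_type, names in GAZETTEERS.items():
            if token.lower() in names:
                label = entity_type
                break
        labels.append(label)
    return labels


tokens = ["Smith", "flew", "to", "Jordan", "with", "Arsenal", "."]
noisy = distant_labels(tokens)
print(list(zip(tokens, noisy)))
```

In this sentence "Jordan" refers to a country, yet the lookup tags it as a person because the string also appears in the list of person names. Rules of this kind label large amounts of text at almost no cost, but they cannot use context, so some labels come out wrong.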

The labels obtained by distant supervision contain noise: not all labels derived by the pre-defined rules are correct. If they were, the rules themselves would already be a perfect NER model. Learning from such noisy datasets can mislead DNNs, since wrong labels produce wrong learning signals, and as a consequence the DNNs may fail to generalize. In other words, distant supervision increases the quantity of the data, not its quality. For DNNs to learn successfully from noisy datasets, specific algorithms are needed that make them robust against the noise. Robustness here means that the DNNs are not misled by the wrong learning signals.

Figure 2 Named-entity labels derived by a set of pre-defined rules. The text is in Yorùbá. Labels in green are correct; labels in red are incorrect.

A Noise-Robust Learning Algorithm

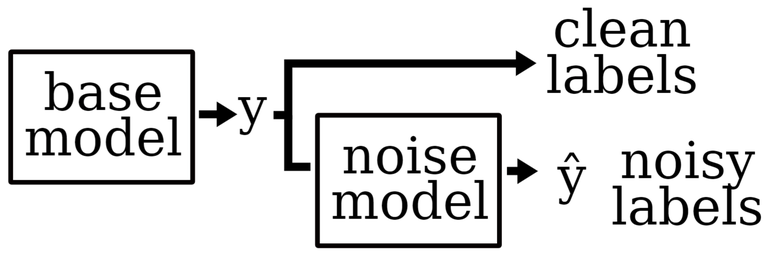

There are several methods for making DNN models robust against label noise. Here we introduce the method proposed by Hedderich and Klakow [3], which is used in the ROXANNE project. The authors use a noise model to capture the noise distribution in the noisy dataset. Figure 3 shows the structure of the model. This method works successfully on NER datasets generated via distant supervision.

To initialize the noise model, one needs a small amount of human-annotated data C. We call C the clean data, as we assume human annotations are noise-free. Distant supervision is applied to obtain a large noisy dataset N. C is normally one or two orders of magnitude smaller than N. The final training set D for the model is the union of C and N. As D contains noisy annotations, we observe only a noisy version of the data distribution. The base model (a DNN-based NER classifier in this case) models the clean distribution of the label y given a sample x. The noise model then converts the clean distribution predicted by the base model into a noisy one. During training, the noise model is optimized to approximate the noisy distribution observed in the noisy training data, while the base model still models the clean distribution. At inference time, the noise model is dropped and the trained base model alone is used for NER. For more details, please refer to [3].
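The conversion from a clean to a noisy distribution can be sketched with a label confusion matrix. The code below is a simplified illustration under several assumptions, not the implementation from [3]: it assumes the noise model is a matrix of probabilities p(noisy label | clean label), that this matrix is estimated by comparing the human labels in C with the labels that distant supervision assigns to the same tokens, and that it stays fixed (in the method described above, the noise model is further optimized during training). The function names and toy data are hypothetical.

```python
from typing import List, Sequence


def estimate_noise_matrix(
    clean: Sequence[int], noisy: Sequence[int], num_labels: int
) -> List[List[float]]:
    """Row i holds the estimated distribution p(noisy label = j | clean label = i)."""
    counts = [[0.0] * num_labels for _ in range(num_labels)]
    for y_clean, y_noisy in zip(clean, noisy):
        counts[y_clean][y_noisy] += 1.0
    matrix = []
    for i, row in enumerate(counts):
        total = sum(row)
        if total == 0:
            # Label never seen in the clean data: assume it is not corrupted.
            matrix.append([1.0 if j == i else 0.0 for j in range(num_labels)])
        else:
            matrix.append([count / total for count in row])
    return matrix


def to_noisy_distribution(
    clean_probs: Sequence[float], noise_matrix: List[List[float]]
) -> List[float]:
    """p(noisy = j | x) = sum_i p(clean = i | x) * p(noisy = j | clean = i)."""
    num_labels = len(noise_matrix)
    return [
        sum(clean_probs[i] * noise_matrix[i][j] for i in range(num_labels))
        for j in range(num_labels)
    ]


# Toy data with label ids 0 = O, 1 = PER, 2 = ORG.
clean_labels = [0, 0, 0, 0, 1, 1, 2, 2]  # human annotations on C
noisy_labels = [0, 0, 0, 0, 1, 0, 2, 1]  # rule-based labels for the same tokens
noise = estimate_noise_matrix(clean_labels, noisy_labels, num_labels=3)

base_probs = [0.1, 0.8, 0.1]  # hypothetical base model output for one token
noisy_probs = to_noisy_distribution(base_probs, noise)
print(noisy_probs)
```

During training, the loss on samples from N would be computed against `noisy_probs`, so the base model is not penalized for predicting a correct label that the rules got wrong. At inference time only `base_probs` is used.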

Figure 3 Visualization of the general noise model architecture. The base model works directly on the data and predicts the clean label y. For noisily labelled data, a noise model is added after the base model’s predictions.

References

- [1] Fei-Fei, Li. "ImageNet: Crowdsourcing, Benchmarking & Other Cool Things." CMU VASC Seminar, March 2010.

- [2] Adelani, David Ifeoluwa, et al. "Distant Supervision and Noisy Label Learning for Low Resource Named Entity Recognition: A Study on Hausa and Yorùbá." arXiv preprint arXiv:2003.08370 (2020).

- [3] Hedderich, Michael A., and Dietrich Klakow. "Training a Neural Network in a Low-Resource Setting on Automatically Annotated Noisy Data." Proceedings of the Workshop on Deep Learning Approaches for Low-Resource NLP. 2018.